Palo Alto, April 8, 2025 – Vectara, a platform for enterprise Retrieval-Augmented Generation (RAG) and AI-powered agents and assistants, today announced the launch of Open RAG Eval, its open-source RAG evaluation framework. The framework, developed in conjunction with researchers from the University of Waterloo, allows enterprise users to evaluate response quality for each component and […]

Enhancing Retail AI with RAG-Based Recommendations

The retail industry needs more agile and adaptive recommendation systems. Retrieval-Augmented Generation (RAG) presents a promising solution. By combining information retrieval with generative AI, the RAG-based recommendation system …

Generative AI’s Accuracy Depends on an Enterprise Storage-driven RAG Architecture

[SPONSORED POST] In this sponsored article, Eric Herzog, CMO of Infinidat, suggests that a transformative effort to bring one's company into the AI-enhanced future is also an opportunity to leverage intelligent automation with RAG to create better …

Embracing the Future: Generative AI for Executives

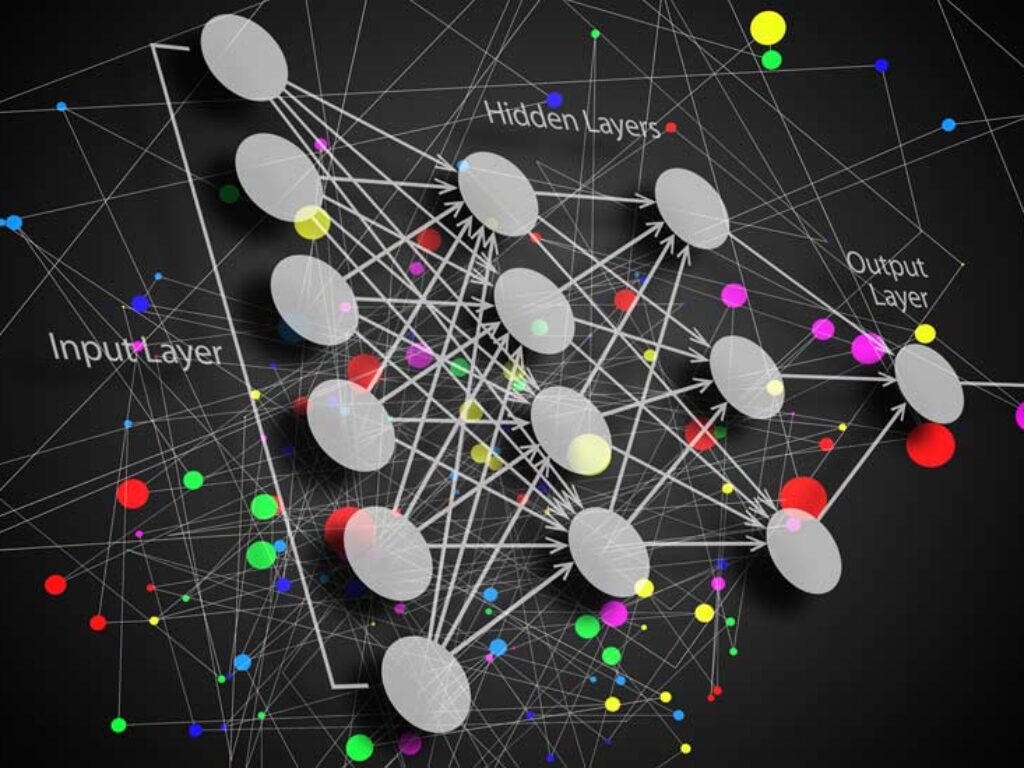

In this feature article, Daniel D. Gutierrez, insideAInews Editor-in-Chief & Resident Data Scientist, believes that as generative AI continues to evolve, its potential applications across industries are boundless. For executives, understanding the foundational concepts of transformers, LLMs, self-attention, multi-modal models, and retrieval-augmented generation is crucial.

Opaque Systems Extends Confidential Computing to Augmented Language Model Implementations

In this contributed article, editorial consultant Jelani Harper discusses Opaque Gateway, a software offering recently unveiled by Opaque Systems that broadens the utility of confidential computing to include augmented prompt applications of language models. One of the chief use cases of the gateway technology is protecting the privacy, sovereignty, and security of the enterprise data that organizations frequently use to augment language model prompts.

Overcoming the Technical and Design Hurdles for Proactive AI Systems

In this contributed article, George Davis, founder and CEO of Frame AI, highlights that we find ourselves at an early, crucial stage in the AI R&D lifecycle. Excitement over AI's potential is dragging it into commercial development well before reliable engineering practices have been established. Architectural patterns like RAG are essential in moving from theoretical models to deployable solutions.

2024 Trends in Data Technologies: Foundation Models and Confidential Computing

In this contributed article, editorial consultant Jelani Harper suggests that perhaps the single greatest force shaping, if not reshaping, the contemporary data sphere is the pervasive presence of foundation models. Manifest most acutely in deployments of generative artificial intelligence, these models are impacting everything from external customer interactions to internal employee interfaces with data systems.

What is a RAG?

In this contributed article, Magnus Revang, Chief Product Officer of Openstream.ai, points out that in the large language model space, one acronym is frequently put forward as the solution to all of their weaknesses. Hallucinations? RAG. Privacy? RAG. Confidentiality? RAG. Unfortunately, when asked to define RAG, people offer definitions that are all over the place.