San Francisco – June 27, 2025 – CTGT, which enables enterprises to deploy AI for high-risk use cases, today announced an upgrade to its platform designed to remove bias, hallucinations and other unwanted model features from DeepSeek and other open-source AI models. In testing, CTGT enabled DeepSeek to answer 96 percent of sensitive […]

SambaNova Reports Fastest DeepSeek-R1 671B with High Efficiency

Palo Alto, CA – Generative AI company SambaNova announced last week that DeepSeek-R1 671B is running today on SambaNova Cloud at 198 tokens per second (t/s), “achieving speeds and efficiency that no other platform can match,” the company said. DeepSeek-R1 has reduced AI training costs by 10X, but its widespread adoption has been hindered by […]

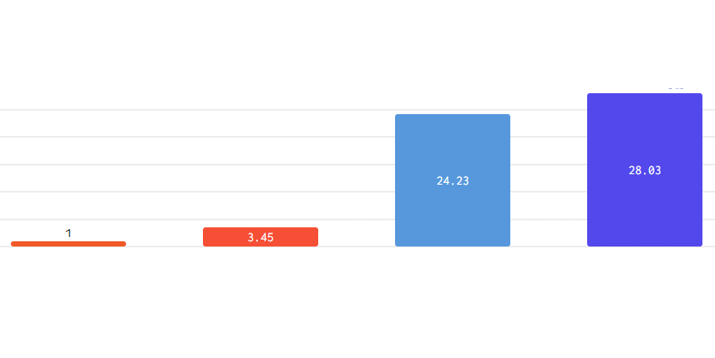

Cerebras Reports Fastest DeepSeek R1 Distill Llama 70B Inference

Cerebras Systems today announced what it said is record-breaking performance for DeepSeek-R1-Distill-Llama-70B inference, achieving more than 1,500 tokens per second – 57 times faster than GPU-based solutions. Cerebras said this speed enables instant reasoning capabilities …

News Bytes Podcast 20250203: DeepSeek Lessons, Intel Reroutes GPU Roadmap, LANL and OpenAI for National Security, Nuclear Reactors for Google Data Centers

Happy February to one and all! The HPC-AI world was upended last week by AI benchmark numbers from DeepSeek. As the dust settles, we offer a brief commentary on what, at this stage, it may mean …