In this contributed article, Ben Kartzman, Chief Operating Officer at Flashtalking and Mediaocean, discusses how advancements in contextual intelligence and GenAI are transforming creative personalization in digital advertising amid diminishing data signals like Mobile Ad IDs and third-party cookies. Ben points out that AI-driven improvements enable more accurate targeting and tailored ad creative, while GenAI facilitates the real-time creation of new ad assets, leading to unprecedented personalization. He argues that these advancements are crucial in the evolving landscape of digital advertising, redefining the traditional approach of delivering relevant messages to audiences.

Improvements in Contextual Intelligence and Generative AI will Help Creative Personalization Reach its Full Potential

Heard on the Street – 5/9/2024

Welcome to insideAI News’s “Heard on the Street” round-up column! In this regular feature, we highlight thought-leadership commentaries from members of the big data ecosystem. Each edition covers the trends of the day with compelling perspectives that can provide important insights to give you a competitive advantage in the marketplace.

AI Fuels Nearly 30% Increase in IT Modernization Spend, Yet Businesses Are Unprepared for Growing Data Demands, Couchbase Survey Reveals

Couchbase, Inc. (NASDAQ: BASE), the cloud database platform company, released the findings from its seventh annual survey of global IT leaders. The study of 500 senior IT decision makers found that investment in IT modernization is set to increase by 27% in 2024, as enterprises look to take advantage of new technologies, such as AI and edge computing, while meeting ever-increasing productivity demands.

Is Generative AI Having Its Oppenheimer Moment?

In this contributed article, Manuel Sanchez, Information Security and Compliance Specialist at iManage, discusses how Generative AI – which burst into the mainstream a little over a year ago – seems to be having an Oppenheimer moment of its own.

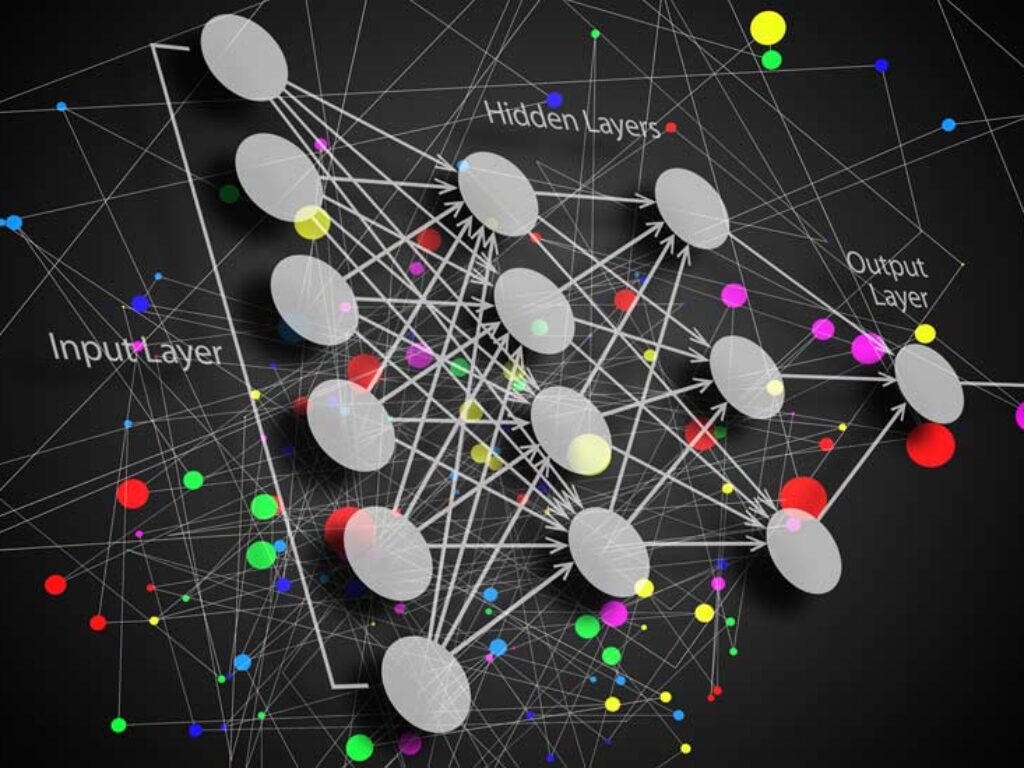

Big AIs in Small Devices

In this contributed article, Luc Andrea, Engineering Director at Multiverse Computing, discusses the challenge of integrating increasingly complex AI systems, particularly Large Language Models, into resource-limited edge devices in the IoT era. He proposes quantum-inspired algorithms and tensor networks as potential solutions for compressing these large AI models, making them suitable for edge computing without compromising performance.

In 2024, Data Quality and AI Will Open New Doors

In this contributed article, Stephany Lapierre, Founder and CEO of Tealbook, discusses how AI can help streamline procurement processes, reduce costs and improve supplier management, while also addressing common concerns and challenges related to AI implementation like data privacy, ethical considerations and the need for human oversight.

The Importance of Protecting AI Models

In this contributed article, Rick Echevarria, Vice President, Security Center of Excellence, Intel, touches on the growing importance of protecting AI models and the data they contain, as this data is often sensitive, private, or regulated. Leaving AI models and their training data sets unmanaged, unmonitored, and unprotected can put an organization at significant risk of data theft, fines, and more. Additionally, poorly managed data practices could result in costly compliance violations or a data breach that must be disclosed to customers.

Why Integration Data is Critical for Powering SaaS Platforms’ AI Features

In this contributed article, Gil Feig, co-founder and CTO of Merge, discusses how integration data can support AI features and why successful AI companies would not exist without successful product integrations.

Rockets: A Good Analogy for AI Language Models

In this contributed article, Varun Singh, President and co-founder of Moveworks, sees rockets as a fitting analogy for AI language models. While the core engines impress, he explains the critical role of vernier thrusters in providing stability for the larger engine. Likewise, large language models need the addition of smaller, specialized models to enable oversight and real-world grounding. With the right thrusters in place, enterprises can steer high-powered language models in the right direction.

Unveiling Jamba: AI21’s Groundbreaking Hybrid SSM-Transformer Open-Source Model

AI21, a leader in AI systems for the enterprise, unveiled Jamba, the production-grade Mamba-style model – integrating Mamba Structured State Space model (SSM) technology with elements of traditional Transformer architecture. Jamba marks a significant advancement in large language model (LLM) development, offering unparalleled efficiency, throughput, and performance.