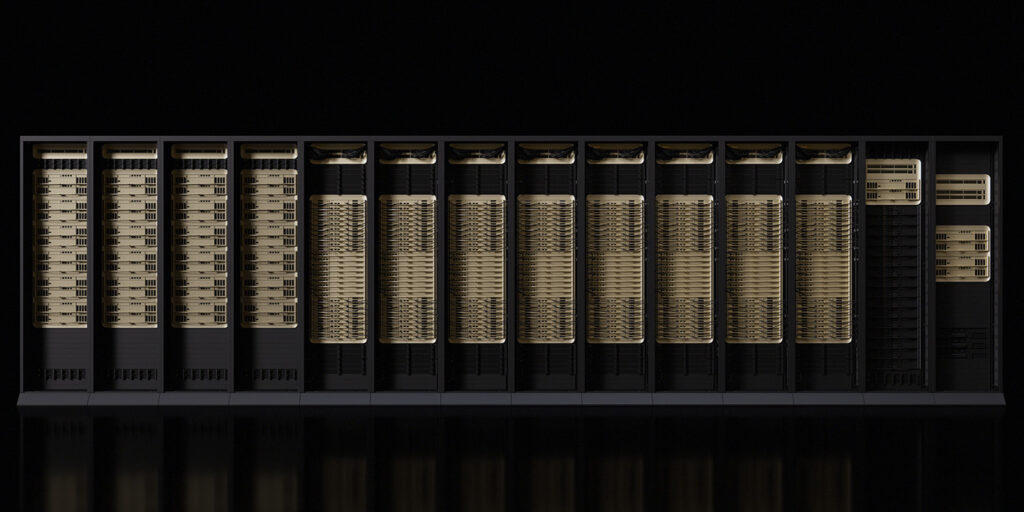

NVIDIA Vera Rubin platform

Those who expected NVIDIA CEO Jensen Huang to hold off on an update about the company’s next big AI chip until the upcoming GTC conference in March were surprised last night. Huang released details at CES in Las Vegas about the Vera Rubin processor, first discussed last March at the company’s GTC conference in San Jose, saying the new chip is in “full production” and will be available in the second half of this year.

Among the hallmarks that set NVIDIA apart from tech company behavior of the past is delivering new products on time or ahead of schedule while pursuing a roadmap free of the fear of “cannibalism,” the concern that new products will eat into the potential revenue of existing products still on the market. While NVIDIA may, indeed, not have squeezed every dollar out of Vera Rubin’s predecessors, the company’s red-hot product cadence has put enormous pressure on its competitors while also delivering massive volumes of chips to a market sector with constant demand for the latest and greatest chips regardless of how rapidly they’re rolled out: the hyperscalers and AI cloud companies.

Huang positioned Vera Rubin last night as a blow-out performer, delivering 5x the AI compute of the current Grace Blackwell flagship chip.

NVIDIA said the Rubin platform uses extreme codesign across six chips (the NVIDIA Vera CPU, NVIDIA Rubin GPU, NVIDIA NVLink 6 Switch, NVIDIA ConnectX-9 SuperNIC, NVIDIA BlueField-4 DPU and NVIDIA Spectrum-6 Ethernet Switch) that together cut training time and inference token costs.

“Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof,” said Huang. “With our annual cadence of delivering a new generation of AI supercomputers — and extreme codesign across six new chips — Rubin takes a giant leap toward the next frontier of AI.”

Named for astronomer Vera Florence Cooper Rubin, the platform features the NVIDIA Vera Rubin NVL72 rack-scale solution and the NVIDIA HGX Rubin NVL8 system.

NVIDIA said the platform introduces five innovations, including the latest generations of NVIDIA NVLink interconnect technology, Transformer Engine, Confidential Computing and RAS Engine, as well as the NVIDIA Vera CPU.

“These breakthroughs will accelerate agentic AI, advanced reasoning and massive-scale mixture-of-experts (MoE) model inference at up to 10x lower cost per token of the NVIDIA Blackwell platform,” the company said in its announcement. “Compared with its predecessor, the NVIDIA Rubin platform trains MoE models with 4x fewer GPUs to accelerate AI adoption.”

Vera Rubin is designed to address the emerging adoption of agentic AI and reasoning models, which are pushing the limits of computation. Multistep problem-solving requires models to process, reason and act across long sequences of tokens. The Rubin platform’s five technologies include:

- Sixth-Generation NVIDIA NVLink: Delivers the GPU-to-GPU communication required for MoE models. Each GPU offers 3.6TB/s of bandwidth, while the Vera Rubin NVL72 rack provides 260TB/s, which NVIDIA said is more bandwidth than the entire internet. With built-in, in-network compute for collective operations, as well as new features for serviceability and resiliency, the NVLink 6 switch is built for AI training and inference at scale.

- Vera CPU: Designed for agentic reasoning, Vera is the most power‑efficient CPU for large-scale AI factories, NVIDIA said. It is built with 88 NVIDIA custom Olympus cores, Armv9.2 compatibility and ultrafast NVLink-C2C connectivity.

- Rubin GPU: Featuring a third-generation Transformer Engine with hardware-accelerated adaptive compression, Rubin GPU delivers 50 petaflops of NVFP4 compute for AI inference.

- Third-Generation NVIDIA Confidential Computing: The company said Vera Rubin NVL72 is the first rack-scale platform to deliver NVIDIA Confidential Computing — which maintains data security across CPU, GPU and NVLink domains.

- Second-Generation RAS Engine: The Rubin platform features health checks, fault tolerance and proactive maintenance. The rack’s modular, cable-free tray design enables up to 18x faster assembly and servicing than Blackwell.
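
The rack-level NVLink figure quoted above follows from the per-GPU number. The short Python sketch below multiplies the two values NVIDIA cited (72 GPUs per NVL72 rack, 3.6TB/s per GPU); the function and constant names are illustrative, and the inputs are the company's claims rather than measured results.

```python
# Figures as quoted in the article (NVIDIA's claims, not measurements).
GPUS_PER_RACK = 72            # Rubin GPUs in one Vera Rubin NVL72 rack
NVLINK_TBPS_PER_GPU = 3.6     # NVLink 6 bandwidth per GPU, in TB/s


def rack_nvlink_bandwidth_tbps(gpus: int = GPUS_PER_RACK,
                               per_gpu_tbps: float = NVLINK_TBPS_PER_GPU) -> float:
    """Aggregate NVLink bandwidth for a rack: GPU count times per-GPU bandwidth."""
    return gpus * per_gpu_tbps


total = rack_nvlink_bandwidth_tbps()
print(f"Aggregate NVLink bandwidth: {total:.1f} TB/s")
```

The product is 259.2TB/s, which rounds to the 260TB/s NVIDIA cited for the rack.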

The Rubin platform also introduces the NVIDIA Inference Context Memory Storage Platform, which the company said is a new class of AI-native storage infrastructure designed to scale inference context at gigascale.

Powered by NVIDIA BlueField-4, the platform enables sharing and reuse of key-value cache data across AI infrastructure, designed to improve responsiveness and throughput.

As AI factories increasingly adopt bare-metal and multi-tenant deployment models, maintaining strong infrastructure control and isolation becomes essential. BlueField-4 also introduces Advanced Secure Trusted Resource Architecture, or ASTRA, a system-level architecture that gives AI infrastructure builders a single control point to provision, isolate and operate large-scale AI environments without compromising performance.

With AI applications evolving toward multi-turn agentic reasoning, AI-native organizations manage and share larger volumes of inference context across users, sessions and services. NVIDIA Vera Rubin NVL72 is designed to offer a unified system that combines 72 NVIDIA Rubin GPUs, 36 NVIDIA Vera CPUs, NVIDIA NVLink 6, NVIDIA ConnectX-9 SuperNICs and NVIDIA BlueField-4 DPUs.
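
To put the rack composition in perspective, the per-chip figures quoted earlier in the article can be scaled to the full NVL72 configuration. The Python sketch below is a back-of-the-envelope calculation only: the `RackSpec` class and its field names are hypothetical, and the derived totals are simple multiplication of NVIDIA's stated per-chip numbers, not figures taken from the announcement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackSpec:
    """Hypothetical container for the NVL72 figures quoted in the article."""
    gpus: int = 72                       # Rubin GPUs per rack
    cpus: int = 36                       # Vera CPUs per rack
    nvfp4_pflops_per_gpu: float = 50.0   # NVFP4 inference compute per GPU
    cores_per_cpu: int = 88              # Olympus cores per Vera CPU

    def nvfp4_exaflops(self) -> float:
        # 1 exaflop = 1,000 petaflops
        return self.gpus * self.nvfp4_pflops_per_gpu / 1000

    def cpu_cores(self) -> int:
        return self.cpus * self.cores_per_cpu

    def gpus_per_cpu(self) -> float:
        return self.gpus / self.cpus


rack = RackSpec()
print(f"NVFP4 inference compute: {rack.nvfp4_exaflops():.1f} exaflops")
print(f"CPU cores: {rack.cpu_cores()}")
print(f"GPUs per CPU: {rack.gpus_per_cpu():.0f}")
```

Under these assumptions, one rack works out to 3.6 exaflops of NVFP4 inference compute and 3,168 CPU cores, with two GPUs paired to each CPU.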

NVIDIA said it will also offer the NVIDIA HGX Rubin NVL8 platform, a server board that links eight Rubin GPUs through NVLink to support x86-based generative AI platforms. The HGX Rubin NVL8 platform accelerates training, inference and scientific computing for AI and high-performance computing workloads.

NVIDIA DGX SuperPOD serves as a reference for deploying Rubin-based systems at scale, integrating either NVIDIA DGX Vera Rubin NVL72 or DGX Rubin NVL8 systems with NVIDIA BlueField-4 DPUs, NVIDIA ConnectX-9 SuperNICs, NVIDIA InfiniBand networking and NVIDIA Mission Control software.