It’s often said that the supercomputers of a few decades ago packed less power than today’s smartwatches. Now a company, Tiiny AI Inc., claims to have built the world’s smallest personal AI supercomputer, one that can run a 120-billion-parameter large language model on-device, without cloud connectivity, servers, or GPUs.

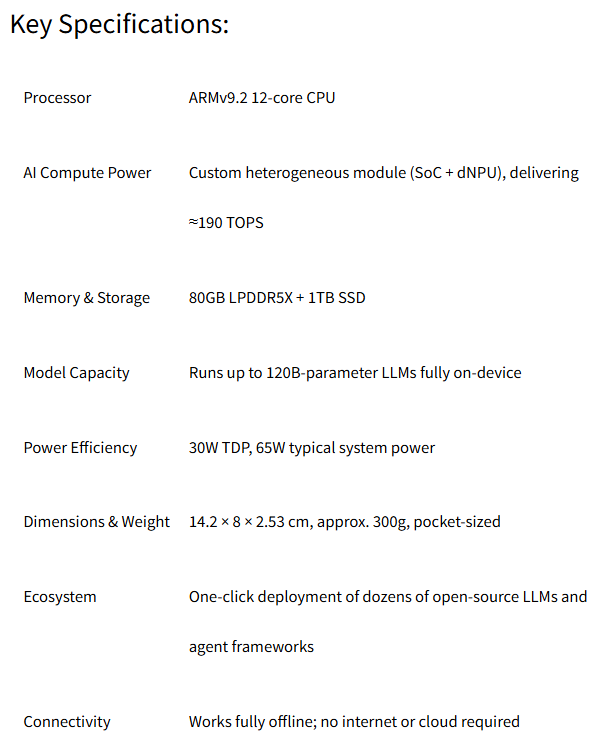

The company said the Arm-based product is powered by two technical advances that make large-parameter LLMs viable on a compact device:

- TurboSparse, a neuron-level sparse activation technique, improves inference efficiency while maintaining full model intelligence.

- PowerInfer, an open-source heterogeneous inference engine with more than 8,000 GitHub stars, accelerates heavy LLM workloads by dynamically distributing computation across the CPU and NPU, enabling server-grade performance at a fraction of traditional power consumption.
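The announcement gives no implementation details for either technique. As a rough illustration only, here is the general idea behind neuron-level sparse activation in a toy ReLU feed-forward layer: a hidden neuron whose activation is zero contributes nothing to the output, so its weights can be skipped, and the remaining work can be split between two processors whose partial results simply add up. The function names, toy weights, and the "NPU"/"CPU" split are hypothetical and are not Tiiny AI's or PowerInfer's code. Real engines typically use a small predictor to guess which neurons will fire before computing them; this sketch evaluates each activation directly for simplicity.

```python
# Illustrative sketch only: neuron-level sparse activation in a toy ReLU FFN.
# Assumption: y = W_down^T · relu(W_up · x); this is not Tiiny AI or PowerInfer code.
from typing import Iterable, List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def activation(w_up: Matrix, x: Vector, i: int) -> float:
    """ReLU activation of hidden neuron i (one row of the up-projection)."""
    return relu(sum(w * xi for w, xi in zip(w_up[i], x)))


def dense_ffn(w_up: Matrix, w_down: Matrix, x: Vector) -> Vector:
    """Reference path: every neuron's down-projection row is multiplied in."""
    out = [0.0] * len(w_down[0])
    for i in range(len(w_up)):
        h = activation(w_up, x, i)
        for j, w in enumerate(w_down[i]):
            out[j] += h * w
    return out


def sparse_ffn(w_up: Matrix, w_down: Matrix, x: Vector, neurons: Iterable[int]) -> Vector:
    """Sparse path: rows of w_down for inactive (zero) neurons are skipped."""
    out = [0.0] * len(w_down[0])
    for i in neurons:
        h = activation(w_up, x, i)
        if h == 0.0:
            continue  # inactive neuron contributes nothing to the output
        for j, w in enumerate(w_down[i]):
            out[j] += h * w
    return out


def split_ffn(w_up: Matrix, w_down: Matrix, x: Vector, hot: List[int]) -> Vector:
    """Hypothetical two-device split: 'hot' neurons on one processor, the rest on another."""
    hot_set = set(hot)
    cold = [i for i in range(len(w_up)) if i not in hot_set]
    part_npu = sparse_ffn(w_up, w_down, x, hot)   # stand-in for the NPU's share
    part_cpu = sparse_ffn(w_up, w_down, x, cold)  # stand-in for the CPU's share
    return [a + b for a, b in zip(part_npu, part_cpu)]


# Toy weights: 3 hidden neurons, 2 inputs, 2 outputs (made-up values).
W_UP = [[1.0, -1.0], [-2.0, 0.5], [0.5, 0.5]]
W_DOWN = [[1.0, 0.0], [0.0, 1.0], [2.0, -1.0]]
X = [1.0, 2.0]

if __name__ == "__main__":
    active = [i for i in range(len(W_UP)) if activation(W_UP, X, i) > 0.0]
    print("active neurons:", active)  # only neuron 2 fires for this input
    print(dense_ffn(W_UP, W_DOWN, X))
    print(sparse_ffn(W_UP, W_DOWN, X, range(len(W_UP))))
    print(split_ffn(W_UP, W_DOWN, X, hot=[2]))
```

For this input two of the three neurons are inactive, so the sparse path touches one row of `W_DOWN` instead of three while producing the same output as the dense path. How much work a real model saves depends on how sparse its activations actually are.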

Designed for energy-efficient personal intelligence, Tiiny AI Pocket Lab runs within a 65W power envelope and enables large-model performance at a fraction of the energy and carbon footprint of traditional GPU-based systems, Tiiny said.

The company said the bottleneck in today’s AI ecosystem is not computing power — it’s dependence on the cloud.

“Cloud AI has brought remarkable progress, but it also created dependency, vulnerability, and sustainability challenges,” said Samar Bhoj, GTM Director of Tiiny AI. “With Tiiny AI Pocket Lab, we believe intelligence shouldn’t belong to data centers, but to people. This is the first step toward making advanced AI truly accessible, private, and personal, by bringing the power of large models from the cloud to every individual device.”

“The device represents a major shift in the trajectory of the AI industry,” the company said. “As cloud-based AI increasingly struggles with sustainability concerns, rising energy costs, global outages, the prohibitive costs of long-context processing, and growing privacy risks, Tiiny AI introduces an alternative model centered on personal, portable, and fully private intelligence.”

The device has been verified by Guinness World Records under the category “The Smallest MiniPC (100B LLM Locally),” according to the company.

The company calls itself a “U.S.-based deep tech AI startup,” although its announcement carries a Hong Kong dateline. Founded in 2024, it brings together engineers from MIT, Stanford, HKUST, SJTU, Intel, and Meta with expertise in AI inference and hardware–software co-design. Their research has been published at academic conferences including SOSP, OSDI, ASPLOS, and EuroSys. In 2025, Tiiny AI secured a multimillion-dollar seed round from leading global investors, according to the company.

Tiiny AI Pocket Lab is designed to support major personal AI use cases, serving developers, researchers, creators, professionals, and students. It enables multi-step reasoning, deep context understanding, agent workflows, content generation, and secure processing of sensitive information — even without internet access. The device also provides true long-term personal memory by storing user data, preferences, and documents locally with bank-level encryption, offering a level of privacy and persistence that cloud-based AI systems cannot provide.

Tiiny AI Pocket Lab operates in the ‘golden zone’ of personal AI (10B–100B parameters), which the company says satisfies more than 80 percent of real-world needs. It supports models of up to 120 billion parameters, delivering intelligence levels the company compares to GPT-4o. This, the company said, enables PhD-level reasoning, multi-step analysis, and deep contextual understanding, with the security of fully offline, on-device processing.

The company said the device supports one-click installation of popular open-source models including OpenAI GPT-OSS, Llama, Qwen, DeepSeek, Mistral, and Phi, and enables deployment of open-source AI agents such as OpenManus, ComfyUI, Flowise, Presenton, Libra, Bella, and SillyTavern. Users receive continuous updates, including official OTA hardware upgrades. These features will be released at CES in January 2026.