By Shahar Belkin, Chief Evangelist at ZutaCore

The compute power required by AI and HPC is skyrocketing, driving a global transition from 10-15 megawatt data centers to 50-100 megawatt and even gigawatt AI factories. With next-generation AI superchips operating at 2,800 watts and beyond, the heat a single data center is expected to generate is off the charts.

State of the Cooling Market – Air vs. Liquid, or Both?

A data center that relies only on air cooling needs 1 watt of cooling for every watt of computing. That means 50 percent of its power goes to cooling! With liquid cooling, by contrast, every watt of cooling supports 10 watts of computing. In terms of power usage effectiveness (PUE), air-based cooling delivers a PUE of approximately 1.5, while liquid cooling can cut that to 1.1, or even 1.04 or lower. A shift from 1.5 to 1.1 represents enormous savings. Put another way, for the same power consumption, direct-to-chip liquid cooling will support 75 percent more compute.
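To make the ratios above concrete, here is a small Python sketch of the arithmetic. It assumes the standard definition of PUE (total facility power divided by IT power) and, as a simplification, treats cooling as the only overhead, so a 1:1 cooling-to-compute ratio corresponds to a PUE of 2.0 and a 1:10 ratio to a PUE of 1.1. The function names are illustrative only.

```python
def compute_share(pue: float) -> float:
    """Fraction of total facility power that reaches IT equipment.

    PUE = total facility power / IT power, so the IT (compute) share is 1 / PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 / pue


def pue_from_overhead(cooling_watts_per_it_watt: float) -> float:
    """PUE when cooling is the only overhead (a simplifying assumption)."""
    return 1.0 + cooling_watts_per_it_watt


def extra_compute(pue_before: float, pue_after: float) -> float:
    """Relative gain in compute for the same total facility power."""
    return compute_share(pue_after) / compute_share(pue_before) - 1.0


air_1_to_1 = pue_from_overhead(1.0)      # 1 W of cooling per 1 W of compute
liquid_1_to_10 = pue_from_overhead(0.1)  # 1 W of cooling per 10 W of compute

for label, pue in [("air, 1:1", air_1_to_1), ("air, PUE 1.5", 1.5),
                   ("liquid, 1:10", liquid_1_to_10), ("liquid, PUE 1.04", 1.04)]:
    print(f"{label:18s} PUE={pue:.2f}  compute share={compute_share(pue):.1%}")

print(f"PUE 1.5 -> 1.1: {extra_compute(1.5, 1.1):.0%} more compute")
print(f"PUE 2.0 -> 1.1: {extra_compute(2.0, 1.1):.0%} more compute")
```

Holding total facility power fixed, moving from a PUE of 1.5 to 1.1 yields about 36 percent more compute, while moving from the 1:1 air ratio (PUE 2.0) to 1.1 yields about 82 percent, so the headline gain depends on which baseline is assumed.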

This is why analysts estimate the liquid cooling market will grow from $5.65 billion in 2024 to $48.42 billion by 2034.

Liquid Cooling 101: Direct-to-Chip vs. Immersion

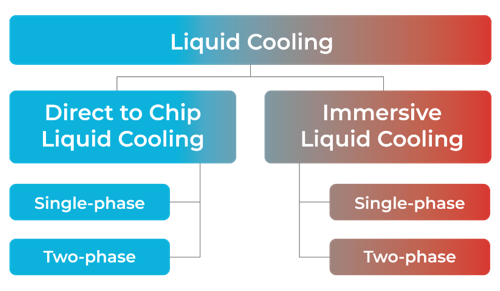

There are several types of liquid cooling technologies, which fall under two categories: immersion and direct-to-chip.

Direct-to-chip is commonly referred to as “cold plate” cooling because it uses cold plates that sit on top of the GPU or CPU, whereas immersion cooling submerges the servers, chips and other equipment in tanks of fluid.

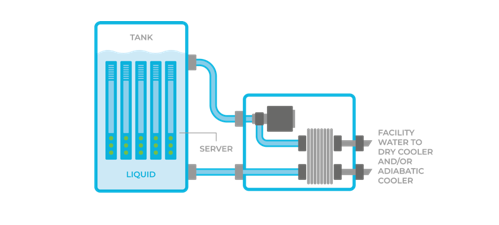

In single-phase immersion, servers and other IT equipment are immersed in a tank of oily fluid, which absorbs heat as the CPU or GPU heats up. The heated fluid rises to the top of the tank and is pumped to a heat exchanger, which cools it and returns it to the tank, as shown below.

The advantage is that it can remove 100 percent of the heat from the server. However, it is limited to cooling lower-power chips (500 watts and below) because the oil is slow to rise to the top of the tank, where it is pumped away for cooling. In addition, the oil is potentially flammable at high temperatures, and because it touches every component, it can shorten the lifetime of the equipment. It also requires heavy maintenance.

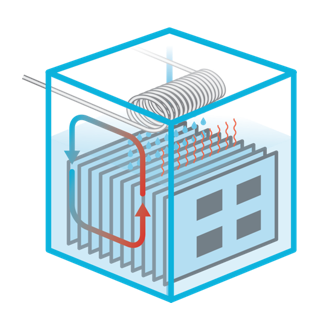

Two-phase immersion also submerges servers and IT equipment in tanks. The difference from single-phase is that it uses a dielectric fluid with a low boiling point instead of oil. As components on the board heat up, they boil the fluid, creating vapor that rises to the top of the tank, where a network of tubes carries cooled facility water. When the vapor touches the cold tubes, it condenses and drips back into the tank.

In single-phase immersion, servers and IT equipment are submerged in fluid held in large tanks.

The advantage is that the dielectric fluid will not short-circuit components and servers the way water would. The downside is that it requires significant investment in data center infrastructure, because large, heavy tanks are needed to house the equipment.

In addition, for equipment to be immersed in the tank, all components must be compatible with the dielectric liquid so that they are not damaged by the fluid itself. This requires specialized equipment or modifications to servers. Maintenance is also an issue, because two-phase immersion often involves long downtimes, with cranes used to lift the servers out of the tanks.

Like single-phase immersion, two-phase immersion can remove 100 percent of the heat. However, the process involves boiling the dielectric fluid in the same tanks that house all the server equipment. As a result, material from the motherboard and other equipment is routinely “boiled off.” This can shorten the lifetime of the equipment, and the released material must be continually filtered out, requiring large, expensive filters and regular maintenance. It is also detrimental to the environment, because dielectric fluid escapes into the atmosphere whenever a tank is opened.

Direct-to-Chip Liquid Cooling

Direct-to-chip cooling brings cooling liquid to a cold plate placed directly on top of high-heat-flux components such as CPUs and GPUs. The liquid removes heat from these components while staying contained within the cold plate, so it never comes into contact with the chips or other server components.

There are two types of direct-to-chip liquid cooling: single-phase and two-phase. Both methods use cold plates, which do not change the server or rack design. The only change is replacing the air-based heat sink on top of the CPU or GPU with a cold plate.

In two-phase immersion, vapor rises from the liquid to the top of the tank.

Single-phase direct-to-chip cooling uses water or a water-glycol mix as the coolant in the cold plate. The water remains liquid, so the amount of heat this method can remove depends on water flow: the higher the power of the chip being cooled, the more flow is required. This calls for investment in larger pipes, tubes and connectors, as well as power-hungry pumps to keep the water moving through the system.

The challenge with this approach is the risk of water leakage and corrosion. With servers approaching the $300K range, a single leak can be catastrophic, not to mention the cost of downtime. In addition, water causes corrosion over time and can also lead to mold, residue, and other biological growth. The water must be continually filtered, maintained and tested to keep it balanced, adding to the maintenance expense.

Because heat removal in single-phase direct-to-chip cooling scales with water flow, the flow requirements climb quickly as chips get hotter. Cooling a 1,000-watt chip this way requires a flow of 1.2 to 1.5 liters per minute. With the latest GPUs at around 1.5 kilowatts, the flow through every cold plate would need to be two liters per minute, and once GPU power passes the 2,000-watt threshold, a flow of a gallon per minute will be needed in the cold plate. As we approach gigawatt data centers, the need for so much water flow makes this approach less effective, and the high pressure it requires in the flexible tubes can lead to water leaks inside the servers.
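The flow figures above follow from a simple sensible-heat balance, Q = ṁ·c_p·ΔT. The Python sketch below estimates the water flow a cold plate needs for a given chip power. It assumes pure water properties and a hypothetical 10 K temperature rise across the cold plate, neither of which comes from the article; treat it as a back-of-the-envelope model, not a cold-plate design tool.

```python
WATER_CP_J_PER_KG_K = 4186.0    # specific heat of liquid water near room temperature
WATER_DENSITY_KG_PER_L = 0.997  # density of water at about 25 °C


def required_flow_lpm(chip_watts: float, delta_t_k: float,
                      cp: float = WATER_CP_J_PER_KG_K,
                      density: float = WATER_DENSITY_KG_PER_L) -> float:
    """Coolant flow (liters per minute) needed to carry chip_watts of heat
    with a coolant temperature rise of delta_t_k across the cold plate.

    Uses the sensible-heat balance Q = m_dot * cp * dT, solved for m_dot.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = chip_watts / (cp * delta_t_k)
    return mass_flow_kg_s / density * 60.0  # kg/s -> L/s -> L/min


# Hypothetical design point: a 10 K rise across the cold plate.
for watts in (1000, 1500, 2000, 2800):
    print(f"{watts:5d} W -> {required_flow_lpm(watts, 10.0):.2f} L/min")
```

Under these assumptions, a 1,000-watt chip needs roughly 1.4 L/min, within the range quoted above, and the required flow grows linearly with chip power (and doubles if the allowed temperature rise is halved). A water-glycol mix has a lower specific heat than water, so it needs even more flow for the same temperature rise.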

Unlike single-phase direct-to-chip, two-phase direct-to-chip does not require liquid flow and, in fact, uses no water in the cold plate. Instead, the server and cold plate contain a heat transfer fluid that is 100 percent safe for IT equipment. Heat from the GPUs and CPUs boils this fluid at a low temperature, and the fluid absorbs the heat through phase change, an efficient physical phenomenon that keeps the chip at a constant temperature.

This is similar to the way boiling water keeps the bottom of a pot at 100 °C, except that here the heat transfer fluid boils at a lower temperature. Because the liquid inside the cold plate is boiling, it never rises above its boiling temperature, even if the heat load triples (as it might with higher-power GPUs and CPUs). This makes the technique highly scalable for cooling the higher-power chips of the future. To understand how this ‘pool boiling’ approach works, see this instructional video.
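As a rough illustration of why boiling caps the fluid temperature, the Python sketch below contrasts an idealized single-phase cold plate (fixed water flow, sensible heating) with idealized pool boiling, where added heat goes into vaporizing fluid rather than raising its temperature. The saturation temperature (50 °C), latent heat (100 kJ/kg), water inlet temperature and flow rate are all hypothetical placeholders, not properties of any specific product. The model also ignores wall superheat and the critical heat flux limit, both of which matter in a real cold plate.

```python
from dataclasses import dataclass

WATER_CP_J_PER_KG_K = 4186.0  # specific heat of liquid water

# Hypothetical fluid properties, for illustration only.
SATURATION_C = 50.0           # assumed boiling point of the heat transfer fluid
LATENT_HEAT_J_PER_KG = 100e3  # assumed heat of vaporization


def single_phase_outlet_c(inlet_c: float, chip_watts: float,
                          flow_kg_s: float) -> float:
    """Water outlet temperature at a fixed flow: the rise is proportional to power."""
    return inlet_c + chip_watts / (flow_kg_s * WATER_CP_J_PER_KG_K)


@dataclass
class BoilingState:
    fluid_temp_c: float    # liquid stays at saturation while it is boiling
    vapor_kg_per_s: float  # more heat means more vapor, not a hotter liquid


def pool_boiling_state(chip_watts: float) -> BoilingState:
    """Idealized pool boiling: heat is absorbed as latent heat of vaporization."""
    return BoilingState(fluid_temp_c=SATURATION_C,
                        vapor_kg_per_s=chip_watts / LATENT_HEAT_J_PER_KG)


for watts in (1000.0, 3000.0):  # a 3x increase in heat load
    outlet = single_phase_outlet_c(inlet_c=30.0, chip_watts=watts, flow_kg_s=0.024)
    boiling = pool_boiling_state(watts)
    print(f"{watts:6.0f} W | single-phase outlet {outlet:5.1f} °C | "
          f"boiling fluid {boiling.fluid_temp_c:.1f} °C, "
          f"vapor {boiling.vapor_kg_per_s * 1000:.0f} g/s")
```

In this toy model, tripling the heat load triples the water temperature rise in the single-phase case, while the boiling fluid's temperature stays put and only the rate of vapor generation triples.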

Two-phase direct-to-chip liquid cooling requires little or no change to data center infrastructure, just a simple installation process. It is also fairly low-maintenance, because the dielectric fluid does not need to be filtered, balanced or replaced. And unlike in immersion, the fluid is not released into the atmosphere during server and rack maintenance.

Hotter Chips Are Coming – Are You Ready?

While chips of more than 2,500 watts are not expected until the end of 2025, data centers and AI factories are already preparing for their arrival. Many hyperscalers are shying away from water because it poses too much risk. Even insurance companies are voicing concerns, because insuring against water leaks could be a huge expense. Beyond this, there is pressure to make the infrastructure scalable, so that it can handle hotter chips as they become available, while also being sustainable, energy-efficient, and cost-effective in the long term.

Knowing all this, is your data center ready?

Shahar Belkin is chief evangelist at ZutaCore, a direct-to-chip liquid cooling solutions company.