Isolated render passes of a 3D character model (3dwally.com)

By Chris Zacharias, CEO, Imgix

Imagine an AI capable of transforming a single photograph into a living, breathing scene. Change the lighting, the weather, or even the camera angle with just a few clicks.

This is not a distant dream; it is the future of generative imaging AI — and its foundation lies in an unlikely ally: game engines.

As natural data sources reach their limits, game engines offer an abundant supply of synthetic data, enabling AI to achieve breakthroughs in digital imaging.

The Synthetic Data Imperative

At the 2024 Conference on Neural Information Processing Systems (NeurIPS), Ilya Sutskever, co-founder of OpenAI, famously stated, “Data is the fossil fuel of AI. We’ve achieved peak data and there will be no more. … We have but one internet.”

This means the natural data we rely on to train models is finite and has already been extensively mined. We must turn to synthetic data — data generated through computation and simulation.

The computer graphics industry has spent decades developing tools that excel at creating synthetic data. Technologies like Unity 3D, Unreal Engine, Blender and Maya are not just tools for creating video games and animations. They are engines of innovation, capable of generating highly detailed, controllable synthetic environments that can provide the precise data needed to train AI systems effectively.

Why Game Engines?

Game engines are uniquely suited to generating synthetic training data for several reasons:

- Game engines allow creators to manipulate every aspect of a synthetic environment. Lighting, shadows, textures, and even physical phenomena like water and fire can be meticulously controlled. This precision enables AI to learn complex relationships between these elements without interference from extraneous variables.

- Generating diverse datasets is critical for training AI models that generalize well. Game engines can create countless permutations of scenes, objects, and environments in real time, providing a virtually infinite supply of training data.

- Game engines calculate and store data in channels, such as depth maps, reflection maps, and shadow maps. These layers can be isolated or combined, helping AI models understand how different phenomena interact. For example, by turning shadows on and off in a synthetic scene, a model can learn the principles of shadow formation and application—something impossible to achieve with natural data alone.
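To make the shadow example concrete, here is a minimal sketch in Python of how isolated channels might be recombined into paired training images. The `RenderPasses` container, the pass names, and the simple `diffuse * shadow + reflection` compositing rule are illustrative assumptions, not the export format or shading model of any particular engine.

```python
from dataclasses import dataclass
from typing import List, Tuple

Image = List[List[float]]  # tiny grayscale image, values in [0, 1]


@dataclass
class RenderPasses:
    """Hypothetical container for per-pixel channels exported by an engine."""
    diffuse: Image     # lit surface color without cast shadows
    shadow: Image      # 1.0 = fully lit, 0.0 = fully in shadow
    reflection: Image  # additive reflection contribution


def composite(passes: RenderPasses, shadows_on: bool = True) -> Image:
    """Combine passes as diffuse * shadow + reflection, clamped to [0, 1].

    This compositing rule is a simplifying assumption for illustration.
    """
    out: Image = []
    for d_row, s_row, r_row in zip(passes.diffuse, passes.shadow, passes.reflection):
        row = []
        for d, s, r in zip(d_row, s_row, r_row):
            visibility = s if shadows_on else 1.0  # ignore the shadow pass when off
            row.append(min(1.0, max(0.0, d * visibility + r)))
        out.append(row)
    return out


def shadow_training_pair(passes: RenderPasses) -> Tuple[Image, Image]:
    """Return (input without shadows, target with shadows) for one scene."""
    return composite(passes, shadows_on=False), composite(passes, shadows_on=True)


passes = RenderPasses(
    diffuse=[[0.75, 0.75], [0.5, 0.5]],
    shadow=[[1.0, 0.25], [1.0, 0.5]],
    reflection=[[0.0, 0.125], [0.0, 0.0]],
)
no_shadow, with_shadow = shadow_training_pair(passes)
print(no_shadow)    # [[0.75, 0.875], [0.5, 0.5]]
print(with_shadow)  # [[0.75, 0.3125], [0.5, 0.25]]
```

Because both images come from the same scene and differ only in the shadow pass, a model trained on such pairs sees the effect of shadows in isolation, which is the kind of controlled comparison a photograph cannot provide.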

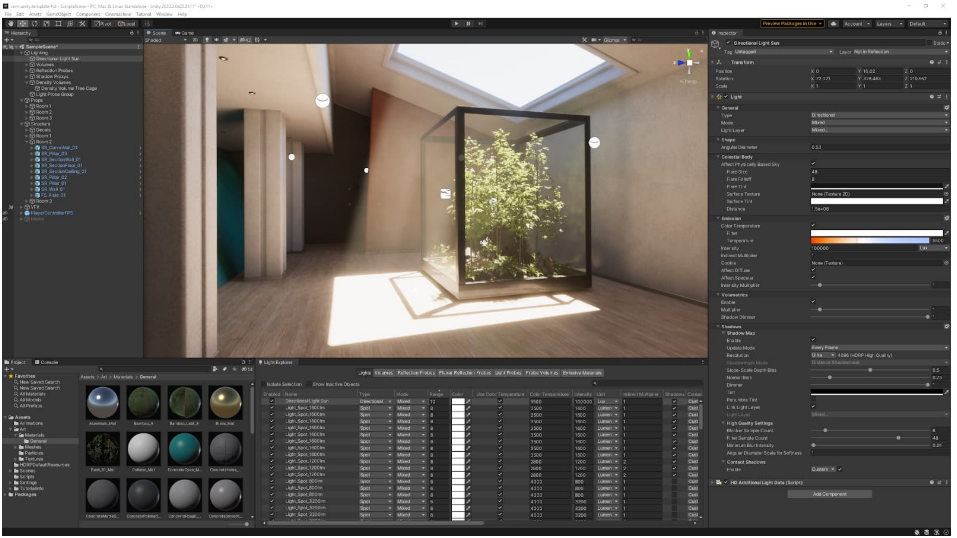

The Unity 3D development environment (unity.com)

From Memory Constraints to New Possibilities

The origins of synthetic data generation in computer graphics stem from necessity. Early computers lacked the memory to store high-resolution natural data, forcing developers to create textures, lighting, and other visual elements mathematically. Over the decades, this has evolved into an art and science. Today, game engines can simulate real-world phenomena like caustics, translucency, and erosion with astonishing accuracy.

These advancements are a goldmine for generative AI. By leveraging synthetic environments, researchers can bypass many of the challenges associated with natural data, such as noise, unpredictability, and labor-intensive collection processes. Instead, they can tailor data to specific AI training objectives, which can shorten the path from idea to trained model considerably.

The ultimate goal of training a generative AI model is generalization — to understand underlying principles and apply them creatively to new scenarios. Models that fail to generalize risk either memorizing their training data or hallucinating implausible outputs, such as a human hand with eight fingers.

Game engines address this challenge in two key ways:

- Focused Training Data: Synthetic environments allow researchers to create datasets that emphasize specific features or phenomena, guiding the model’s learning process.

- Diversity: By overwhelming the model with diverse inputs, game engines force it to learn the fundamental structures and patterns underlying the data.
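Both ideas can be sketched as a small scene sampler. The example below is a hypothetical illustration in Python: the `SceneConfig` fields, the parameter ranges, and the `focus_on_shadows` switch are assumptions chosen for the sketch, and a real pipeline would hand each configuration to an engine for rendering.

```python
import random
from dataclasses import dataclass
from typing import List

WEATHER = ("clear", "overcast", "rain", "fog")  # illustrative categories


@dataclass(frozen=True)
class SceneConfig:
    """Hypothetical scene parameters to pass to a renderer."""
    sun_elevation_deg: float
    sun_azimuth_deg: float
    weather: str
    camera_height_m: float
    shadows_enabled: bool


def sample_scene(rng: random.Random, focus_on_shadows: bool = False) -> SceneConfig:
    """Draw one random scene.

    With focus_on_shadows=True, weather is pinned to "clear" and shadows are
    always on, so the sun position becomes the main source of variation.
    """
    return SceneConfig(
        sun_elevation_deg=rng.uniform(5.0, 85.0),
        sun_azimuth_deg=rng.uniform(0.0, 360.0),
        weather="clear" if focus_on_shadows else rng.choice(WEATHER),
        camera_height_m=rng.uniform(0.5, 3.0),
        shadows_enabled=True if focus_on_shadows else rng.random() < 0.5,
    )


def build_dataset(n: int, seed: int, focus_on_shadows: bool = False) -> List[SceneConfig]:
    """Generate n scene configurations reproducibly from a seed."""
    rng = random.Random(seed)
    return [sample_scene(rng, focus_on_shadows) for _ in range(n)]


diverse = build_dataset(1000, seed=0)                         # broad coverage
focused = build_dataset(1000, seed=0, focus_on_shadows=True)  # emphasizes shadows
print(diverse[0])
print(focused[0])
```

Seeding the generator keeps every dataset reproducible, so a scene that exposes a model weakness can be regenerated exactly and varied one parameter at a time.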

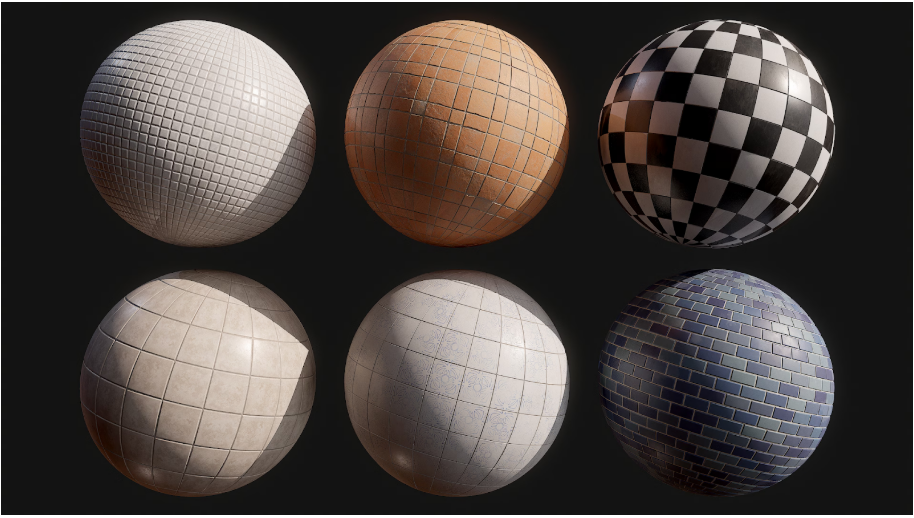

Procedural materials of floor tilings made in Substance Designer (unrealengine.com)

A generalized model forms an understanding of the “guiding” truths of the world it is operating in, much like a traditional artist does. An artist starts by sketching basic shapes, adding perspective lines, and gradually layering in detail to ultimately reach a final drawing. This internalized mindset enables the artist to draw anything, even things they have never seen before firsthand. Similarly, generative AI models trained with synthetic data develop a conceptual understanding of their domain, enabling them to imagine and create beyond their training data.

Risks and Mitigations

However, synthetic data is not without risks. Synthetic environments can sometimes be too “perfect,” lacking the randomness and imperfections of the real world. For example, zooming into a synthetic texture might reveal its mathematical underpinnings rather than the organic complexity of natural data.

To mitigate these risks, researchers can:

- Blend Synthetic and Natural Data: Combining the strengths of both ensures that models remain grounded in reality while benefiting from the scalability of synthetic environments.

- Introduce Imperfections: Adding noise, randomness, and other real-world imperfections can help models learn to handle edge cases and anomalies.
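As a rough sketch of how these two mitigations might combine in practice, the Python example below mixes natural and synthetic samples in a fixed ratio and perturbs only the synthetic ones. The function names, the 25 percent natural fraction, and the Gaussian noise level are assumptions for illustration, not recommended settings.

```python
import random
from typing import List, Sequence, Tuple

Pixels = List[float]  # flattened grayscale patch, values in [0, 1]


def add_sensor_noise(pixels: Sequence[float], rng: random.Random,
                     sigma: float = 0.02) -> Pixels:
    """Add zero-mean Gaussian noise to each pixel and clamp to [0, 1]."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


def blended_batch(synthetic: Sequence[Pixels], natural: Sequence[Pixels],
                  batch_size: int, natural_fraction: float,
                  rng: random.Random) -> List[Tuple[str, Pixels]]:
    """Build one training batch that mixes natural and noised synthetic samples.

    Samples are drawn with replacement; each item is tagged with its source.
    """
    n_natural = round(batch_size * natural_fraction)
    n_synthetic = batch_size - n_natural
    batch = [("natural", list(img)) for img in rng.choices(natural, k=n_natural)]
    batch += [("synthetic", add_sensor_noise(img, rng))
              for img in rng.choices(synthetic, k=n_synthetic)]
    rng.shuffle(batch)  # avoid a fixed ordering of sources within the batch
    return batch


# Flat, "too perfect" synthetic patches and slightly irregular natural ones.
synthetic = [[0.5, 0.5, 0.5, 0.5] for _ in range(10)]
natural = [[0.48, 0.53, 0.5, 0.46] for _ in range(4)]
batch = blended_batch(synthetic, natural, batch_size=8,
                      natural_fraction=0.25, rng=random.Random(0))
for source, pixels in batch:
    print(source, [round(p, 3) for p in pixels])
```

Tagging each sample with its source makes it straightforward to measure later whether a model behaves differently on natural and synthetic inputs.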

The Future of Generative Imaging

The next frontier for generative imaging lies in embedding game engines directly into AI training pipelines. Today, we render images and videos from game engines to use as training data. In the future, AI models could interact with game engines in real time, dynamically exploring and manipulating synthetic environments to expand their latent domains.

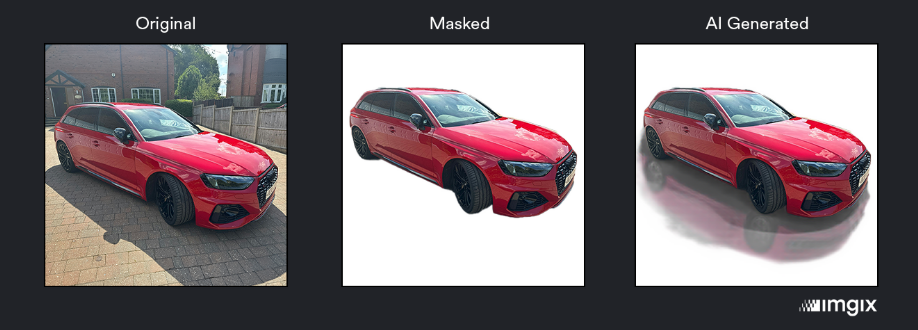

Shadows and reflections generated by an AI model built using synthetic data (imgix.com)

This capability could transform industries. Imagine a photographer capturing a single image and using AI to restage the scene entirely — altering lighting, poses, or even the weather. Filmmakers could shoot rough drafts of scenes knowing that generative AI will refine their vision into a polished masterpiece. Such advancements promise to democratize creativity, empowering individuals and small teams to achieve results that rival those of large production houses.

By harnessing game engines, we can create vast amounts of synthetic data, accelerate AI training, and push the boundaries of what’s possible in digital imaging. The synergy between generative AI and game engines will not only redefine industries but also enable anyone with a vision to bring it to life. As we stand on the brink of this new era, the possibilities are as limitless as the synthetic worlds we can imagine.

Chris Zacharias is founder and CEO of Imgix, a company creating the world’s largest image processing pipeline. Imgix processes more than 8 billion images every day, empowering its customers to unlock the value of their image assets.