Production-grade Mamba-style model offers unparalleled throughput and is the only model in its size class that fits 140K context on a single GPU

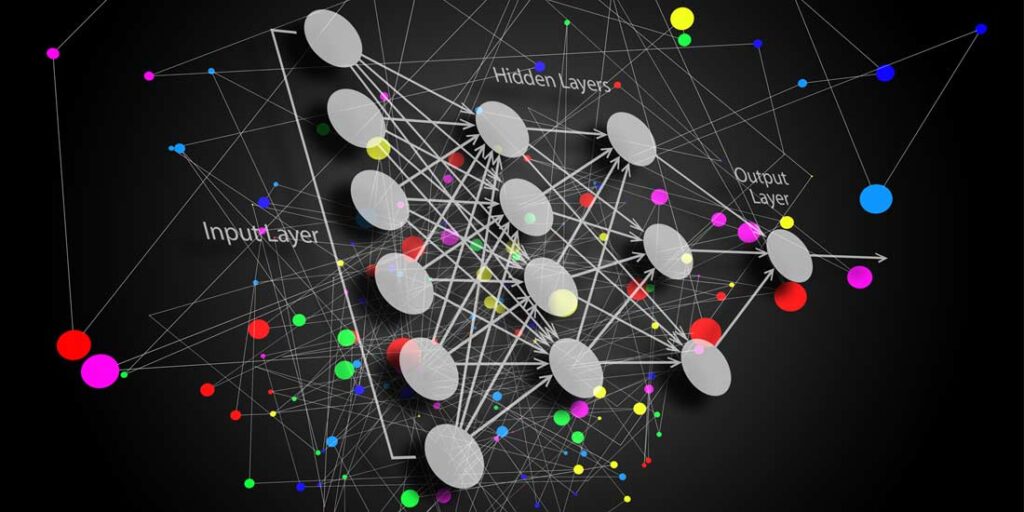

AI21, a leader in AI systems for the enterprise, unveiled Jamba, a production-grade Mamba-style model that integrates Mamba Structured State Space model (SSM) technology with elements of the traditional Transformer architecture. Jamba marks a significant advancement in large language model (LLM) development, offering unparalleled efficiency, throughput, and performance.

Jamba addresses the limitations of both pure SSM models and traditional Transformer architectures. With a context window of 256K tokens, Jamba outperforms other state-of-the-art models in its size class across a wide range of benchmarks, setting a new standard for efficiency and performance.

Jamba features a hybrid architecture that integrates Transformer, Mamba, and mixture-of-experts (MoE) layers, optimizing memory, throughput, and performance simultaneously. Jamba also surpasses Transformer-based models of comparable size by delivering three times the throughput on long contexts, enabling faster processing of large-scale language tasks that solve core enterprise challenges.

Scalability is a key feature of Jamba: it accommodates up to 140K tokens of context on a single GPU, which makes deployment more accessible and encourages experimentation within the AI community.
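One way to see why a hybrid design can fit long contexts in less memory is to estimate the key-value (KV) cache, which in a Transformer grows with every attention layer and every token of context, whereas a Mamba layer keeps a fixed-size state regardless of context length. The sketch below is a rough back-of-the-envelope calculation only. The layer count, head sizes, and the one-in-eight attention ratio are hypothetical values chosen for illustration, not Jamba's published configuration, and the function name `kv_cache_bytes` is our own.

```python
def kv_cache_bytes(num_attention_layers, num_kv_heads, head_dim,
                   context_tokens, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: each attention layer stores one key tensor and one value tensor.
    return (2 * num_attention_layers * num_kv_heads * head_dim
            * context_tokens * bytes_per_value)


# Hypothetical configuration, for illustration only (not Jamba's real numbers).
TOTAL_LAYERS = 32      # assumed depth of the model
KV_HEADS = 8           # assumed number of key/value heads
HEAD_DIM = 128         # assumed dimension per head
CONTEXT = 140_000      # context length quoted in the article

# Every layer is attention: the cache scales with all 32 layers.
full = kv_cache_bytes(TOTAL_LAYERS, KV_HEADS, HEAD_DIM, CONTEXT)

# Assumed hybrid: only 1 in 8 layers is attention; the remaining layers
# are SSM layers whose state does not grow with context length.
hybrid = kv_cache_bytes(TOTAL_LAYERS // 8, KV_HEADS, HEAD_DIM, CONTEXT)

GIB = 1024 ** 3
print(f"all-attention stack:       {full / GIB:.2f} GiB")
print(f"hybrid (1 in 8 attention): {hybrid / GIB:.2f} GiB")
```

Under these assumed numbers the hybrid stack needs one eighth of the KV-cache memory at the same context length. The estimate ignores model weights, activations, and the (constant-size) SSM state, so it shows the scaling trend rather than a real memory budget.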

Jamba’s release marks two significant milestones in LLM innovation: successfully incorporating Mamba alongside the Transformer architecture, and advancing the hybrid SSM-Transformer model to deliver a smaller footprint and faster throughput on long contexts.

“We are excited to introduce Jamba, a groundbreaking hybrid architecture that combines the best of Mamba and Transformer technologies,” said Or Dagan, VP of Product at AI21. “This allows Jamba to offer unprecedented efficiency, throughput, and scalability, empowering developers and businesses to deploy critical use cases in production at record speed in the most cost-effective way.”

Jamba’s release with open weights under the Apache 2.0 license is meant to encourage collaboration and innovation in the open source community and invites further discoveries from it. In addition, Jamba’s integration with the NVIDIA API catalog as a NIM inference microservice makes it easier to access, deploy, and integrate in enterprise applications.

To learn more about Jamba, read the blog post available on AI21’s website. The Jamba research paper is also available online.
