Foundation models have surged to the fore of modern machine learning applications for numerous reasons. Their ability to generate video, images, and text is unrivaled. They also readily perform many different tasks, as illustrated by the use of Large Language Models (LLMs) for everything from transcription to summarization.

But most importantly, they can be readily repurposed from one dataset, application, or domain to another without undue expenditure of time, money, or energy.

Adapting these models to related tasks, such as taking a model designed to classify cars and using it to classify vans, is known as fine-tuning. An organization’s ability to deploy foundation models for mission-critical enterprise use cases ultimately hinges on fine-tuning, which is what makes it practical to build applications on top of them.

According to Monster API CEO Saurabh Vij, “Most developers either want to access foundation models, or fine-tune them for domain specific tasks, or for their own custom datasets.”

In addition to shortening the time required to repurpose foundation models for a developer’s particular needs, fine-tuning furthers the democratization of data science. Contemporary solutions in this space offer no-code fine-tuning options on cloud platforms that supply both GPUs and foundation models on demand and are accessible through APIs.

Users can quickly avail themselves of pre-trained models, fine-tune them for their specific use cases, and broaden the accessibility and impact of advanced machine learning throughout the enterprise.

Model Fine-Tuning

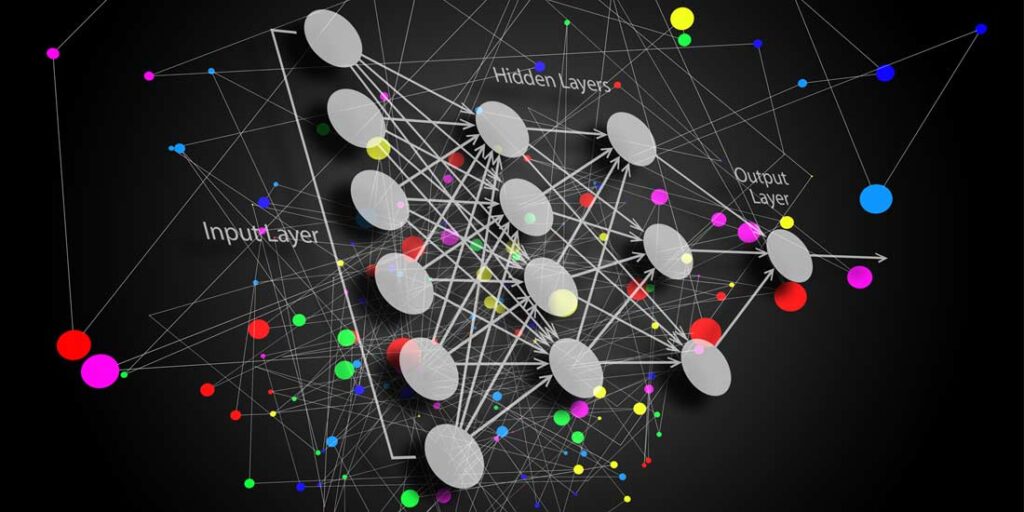

Conceptually, model fine-tuning is one of the many forms of transfer learning in which “you’re taking a pre-trained model and then you’re now re-training it for another task,” Vij explained. “The best part is you don’t need to spend hundreds of thousands of dollars to do this. You can do this for 200 to 300 dollars.” When building an object detection model from scratch to identify cars, for example, users would have to give it hundreds of thousands, if not millions, of examples for its initial training.

However, to apply that model to a similar task like recognizing trucks, one could simply fine-tune it and avoid lengthy (and costly) re-training from scratch. “What we understand is there is so many common features, like headlights, the structure, wheels,” Vij noted. “All those things are similar for both use cases. So, what if we could just remove one or two layers of the car’s model and add new layers for the trucks? This is called fine-tuning.”
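
The recipe Vij describes (keep the shared layers, swap in a new output layer, and train only that) can be sketched in a few lines of pure Python. This is a minimal illustration under simplifying assumptions, not a production approach: the "pre-trained" backbone is a single frozen layer with made-up weights (the hypothetical `BACKBONE_WEIGHTS`), the car model's old output layer is assumed to have been dropped, and a new logistic-regression `Head` is trained on a handful of hypothetical truck examples. A real system would use a deep-learning framework and a genuinely pre-trained network.

```python
import math

# Hypothetical "pre-trained" backbone weights (made-up numbers). In a real network
# these would be learned on the original task (cars) and would encode shared
# features such as headlights, body structure, and wheels.
BACKBONE_WEIGHTS = [
    [0.9, -0.2, 0.1, 0.0],
    [0.1, 0.8, -0.3, 0.2],
    [-0.2, 0.1, 0.7, 0.5],
]

def backbone(x):
    """Frozen feature extractor: one linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class Head:
    """New task-specific output layer (logistic regression) replacing the old one."""

    def __init__(self, n_features):
        self.w = [0.0] * n_features
        self.b = 0.0

    def predict_proba(self, features):
        return sigmoid(sum(w * f for w, f in zip(self.w, features)) + self.b)

    def fit(self, data, lr=0.5, epochs=200):
        """Plain SGD on log-loss. Only the head changes; the backbone stays frozen."""
        for _ in range(epochs):
            for x, y in data:
                features = backbone(x)
                err = self.predict_proba(features) - y  # d(log-loss)/d(logit)
                self.w = [w - lr * err * f for w, f in zip(self.w, features)]
                self.b -= lr * err
        return self

# Hypothetical data for the new task: label 1 = truck, 0 = not a truck.
truck_data = [
    ([1.0, 0.0, 0.5, 0.0], 1),
    ([0.9, 0.1, 0.4, 0.1], 1),
    ([0.0, 1.0, 0.0, 0.5], 0),
    ([0.1, 0.9, 0.1, 0.4], 0),
]
head = Head(n_features=len(BACKBONE_WEIGHTS)).fit(truck_data)
```

Because only the head is trained, this toy task learns just four parameters (three weights and a bias), while the twelve backbone weights are reused unchanged.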

Parameter-Efficient Fine-Tuning

There are many approaches to fine-tuning foundation models. Parameter-Efficient Fine-Tuning (PEFT) is one family of methods: it trains only a small subset of a model’s parameters (or a small number of added ones), which eases the communication and storage costs of keeping a separate full copy of the model for every task. When asked about the cost-effective method of fine-tuning mentioned earlier, Vij characterized it as “a type of transfer learning, which is Parameter-Efficient Fine-Tuning, and one of the techniques is LoRA, which is quickly becoming an industry standard.”

Low-Rank Adaptation (LoRA) is known for reducing the cost and increasing the efficiency of fine-tuning machine learning models. With other approaches, fine-tuning becomes expensive partly because “deep learning models are very large models and they have a lot of memory requirements,” Vij commented. “There’s a lot of parameters in them and their weights are very heavy.”
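
A back-of-the-envelope count shows where LoRA’s savings come from. In LoRA’s standard formulation, a frozen pre-trained weight matrix $W_0$ is adapted by adding a low-rank product $BA$ rather than by updating $W_0$ itself, so only $A$ and $B$ are trained. The dimensions below ($d = k = 4096$, rank $r = 8$) are illustrative assumptions, not figures from any particular model.

```latex
\begin{aligned}
h &= W_0 x + \Delta W x = W_0 x + B A x,
  \quad W_0 \in \mathbb{R}^{d \times k},\ B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k) \\
\text{full fine-tuning:}\quad d k &= 4096 \times 4096 = 16{,}777{,}216 \\
\text{LoRA:}\quad r(d + k) &= 8 \times (4096 + 4096) = 65{,}536 \\
\frac{r(d + k)}{d k} &= \frac{65{,}536}{16{,}777{,}216} = \frac{1}{256} \approx 0.39\%
\end{aligned}
```

These counts are per adapted weight matrix. Gradients and optimizer state are needed only for the trainable $A$ and $B$, so the memory they consume shrinks accordingly.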

LoRA is emerging as a preferable technique for fine-tuning these models, partly because it is an alternative to full fine-tuning. According to Vij, “With LoRA, what happens is you don’t need to fine-tune the entire model, but you can fine-tune a specific part of the model, which can be adapted to learn for the new dataset.” LoRA lets users quickly fine-tune models to pick up the salient traits of a new dataset without building a new model. Its efficiency comes from reduction: the pre-trained weights stay frozen, and the update to each adapted weight matrix is learned as the product of two much smaller, low-rank matrices, which sharply cuts the number of parameters that must be trained and stored. “We’re using this approach because lots of computations reduce a lot,” Vij revealed.
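
The low-rank mechanism can be sketched in pure Python. This is a minimal illustration under stated assumptions, not the implementation used by Monster API or by any library: the hypothetical `LoRALinear` class keeps a frozen weight matrix `W0`, adds a trainable update `scale * B @ A` with `B` zero-initialized (so training starts from the unmodified model), and updates only `A` and `B` with plain SGD on a squared-error loss. The `alpha / rank` scaling follows the common LoRA convention; the initialization scale, learning rate, and toy data are illustrative choices.

```python
import random

def matvec(m, v):
    """Matrix (list of rows) times vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def matmul(a, b):
    """Product of two list-of-rows matrices."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

class LoRALinear:
    """Frozen weight W0 (d x k) plus a trainable low-rank update scale * B @ A."""

    def __init__(self, w0, rank, alpha=1.0, seed=0):
        d, k = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0  # pre-trained weights: read, never updated
        # A (r x k): random Gaussian init (std 1/sqrt(k) is an illustrative choice)
        self.a = [[rng.gauss(0.0, 1.0 / k ** 0.5) for _ in range(k)] for _ in range(rank)]
        # B (d x r): zero init, so the adapted layer starts identical to W0
        self.b = [[0.0] * rank for _ in range(d)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.w0, x)
        update = matvec(self.b, matvec(self.a, x))  # B(Ax): the d x k product is never formed
        return [h + self.scale * u for h, u in zip(base, update)]

    def trainable_params(self):
        return sum(len(row) for row in self.a) + sum(len(row) for row in self.b)

    def sgd_step(self, x, target, lr=0.02):
        """One SGD step on L = 0.5 * ||y - target||^2; only A and B change. Returns pre-step loss."""
        y = self.forward(x)
        g = [yi - ti for yi, ti in zip(y, target)]  # dL/dy
        ax = matvec(self.a, x)                       # r-vector
        bt_g = [sum(self.b[i][j] * g[i] for i in range(len(g))) for j in range(len(ax))]  # B^T g
        # dL/dB = scale * g (Ax)^T and dL/dA = scale * (B^T g) x^T, both from pre-update values
        grad_b = [[self.scale * gi * axj for axj in ax] for gi in g]
        grad_a = [[self.scale * bj * xk for xk in x] for bj in bt_g]
        self.b = [[w - lr * dw for w, dw in zip(rw, rg)] for rw, rg in zip(self.b, grad_b)]
        self.a = [[w - lr * dw for w, dw in zip(rw, rg)] for rw, rg in zip(self.a, grad_a)]
        return 0.5 * sum(gi * gi for gi in g)

    def merged_weight(self):
        """Fold scale * B @ A into W0 so inference costs the same as the original layer."""
        delta = matmul(self.b, self.a)
        return [[w + self.scale * dw for w, dw in zip(rw, rd)] for rw, rd in zip(self.w0, delta)]

# Usage on toy data: a 4 x 4 identity "pre-trained" layer adapted with rank 1.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
layer = LoRALinear(identity, rank=1)
x, target = [1.0, 2.0, -1.0, 0.5], [2.0, 1.0, 0.0, 1.0]
losses = [layer.sgd_step(x, target) for _ in range(300)]
print(layer.trainable_params(), "trainable vs", 4 * 4, "frozen")  # prints: 8 trainable vs 16 frozen
```

The adapter stores r(d + k) numbers instead of d·k, so the savings grow with layer size. Because `merged_weight` folds `B @ A` back into `W0`, the adapted layer can also be served at the same cost as the original one.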

Foundation Models

Once they adopt the proper techniques, organizations have the latitude to fine-tune several foundation models to meet their enterprise AI needs—particularly if they require generative capabilities. Some of the more useful models include:

- Stable Diffusion: Stable Diffusion is an image generation model that users steer with natural language text prompts. “Out-the-box, it can create multiple images,” Vij confirmed. “It has been trained on a large dataset of text-image pairs so that it can understand the context for the text prompts you provide.”

- Whisper AI: This speech-to-text model transcribes audio files. “It’s also adding a little more functionality, such as sentiment analysis and summarization,” Vij added. “But Whisper AI’s specific focus is on transcription, so any audio can be transcribed.” The model can transcribe speech in dozens of languages.

- LLaMA and StableLM: LLaMA (Large Language Model Meta AI) is a collection of LLMs from Meta that is widely used for text-to-text applications. “You write an instruction and it generates an output for you,” Vij remarked. StableLM, from Stability AI, is an open-source alternative.

The Bottom Line

Whether it’s some iteration of GPT, LLaMA, or any other foundation model, the enterprise value of these models depends on the ability to fine-tune them. LoRA and other Parameter-Efficient Fine-Tuning approaches deliver that ability cost-effectively and efficiently, particularly when accessed through no-code cloud frameworks that supply GPUs and access to the models.

“In 2022, you had around 60,000 fine-tuned models,” Vij reflected. “But over the past seven to eight months now, that number has grown to 200,000, and we can anticipate this growth to reach 1,000,000 models in one year.”

About the Author

Jelani Harper is an editorial consultant servicing the information technology market. He specializes in data-driven applications focused on semantic technologies, data governance and analytics.

Sign up for the free insideAI News newsletter.
